Applying AI to Existing Platforms


From AI as a Service by Peter Elger and Eóin Shanaghy

This article discusses how to add AI to existing platforms.

Integration Patterns for Serverless AI

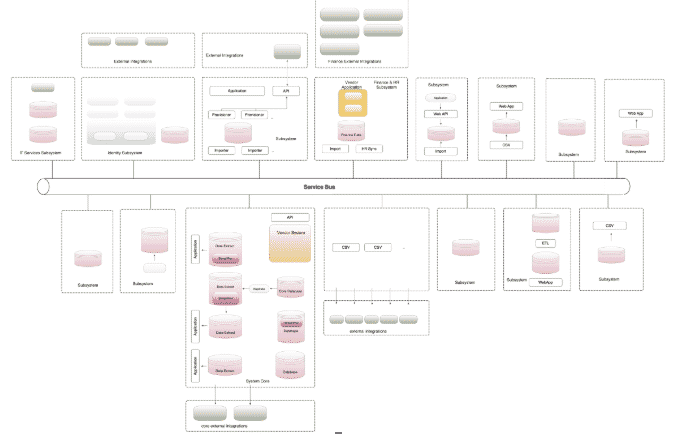

You can’t escape the fact that real-world enterprise computing is “messy”. For a medium to large enterprise, the technology estate is typically large, sprawling, and has often grown organically over time.

An organization’s compute infrastructure can be broken down along domain lines such as Finance, HR, Marketing, Line of Business systems, and so on. Each of these domains may comprise many systems from various vendors along with homegrown software, and they usually mix legacy software with more modern software-as-a-service (SaaS) applications.

Alongside this, the various systems are typically operated in a hybrid model mixing on-premises, co-location, and cloud-based deployment. Furthermore, each of these operational elements must typically integrate with other systems both inside and outside of its domain. These integrations can be by way of batch ETL jobs, point-to-point connections, or some form of Enterprise Service Bus (ESB).

AI is also only one of several technology trends that such an estate is expected to absorb.

ETL, Point-to-Point, and ESB

Enterprise system integration is a large topic area which we won’t cover here, except to note that there are a number of ways in which systems can be connected together. For example, a company may need to export records from its HR database to match up with an expense tracking system. Extract, Transform, and Load (ETL) refers to the process of exporting records from one database, typically in CSV format, transforming them, and then loading them into another database.
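To make the three ETL steps concrete, here is a minimal sketch using only the Python standard library. The CSV columns, the `expense_users` table, and the upper-casing transform are assumptions invented for illustration, and an in-memory SQLite database stands in for the expense tracking system.

```python
import csv
import io
import sqlite3

# Hypothetical export from an HR system (column names are assumptions).
HR_EXPORT_CSV = """employee_id,full_name,department
101,Ada Lovelace,Finance
102,Alan Turing,Marketing
"""


def extract(csv_text):
    """Extract: read the exported CSV into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Transform: reshape HR records into the layout the target expects."""
    return [
        (int(row["employee_id"]), row["full_name"].upper(), row["department"])
        for row in rows
    ]


def load(records, connection):
    """Load: insert the transformed records into the target database."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS expense_users "
        "(id INTEGER PRIMARY KEY, name TEXT, cost_center TEXT)"
    )
    connection.executemany(
        "INSERT OR REPLACE INTO expense_users VALUES (?, ?, ?)", records
    )
    connection.commit()


def run_etl(csv_text, connection):
    """Run all three steps and return the number of rows in the target table."""
    load(transform(extract(csv_text)), connection)
    return connection.execute("SELECT COUNT(*) FROM expense_users").fetchone()[0]


if __name__ == "__main__":
    target = sqlite3.connect(":memory:")  # stand-in for the expense database
    print(run_etl(HR_EXPORT_CSV, target))
```

In a real estate, each step would usually run as a scheduled batch job against production databases rather than in a single script.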

Another method of connecting systems is to use point-to-point integration. For example, some code can be created to call the API of one system and push data to another system’s API. This depends on the provision of a suitable API. Over time, the use of ETL and point-to-point integration can accumulate into a complex and difficult-to-manage system.

An Enterprise Service Bus (ESB) is an attempt to manage this complexity by providing a central system over which these connections can take place. The ESB approach suffers from its own particular pathologies and often causes as many problems as it solves.

Figure 1 illustrates a typical mid-size organization’s technology estate.

Figure 1. Typical enterprise technology estate, broken down by logical domain. This image is intended to illustrate the complex nature of a typical technology estate. The detail of the architecture is not important.

In this example, separate domains are connected together through a central bus. Within each domain, there are separate ETL and batch processes connecting the systems together.

Needless to say, a description of all of this complexity is outside the scope of this article. The question we’ll concern ourselves with is how we can adopt Serverless AI in this environment. Fortunately, there are some simple patterns that we can follow to achieve our goals, but first let’s simplify the problem.

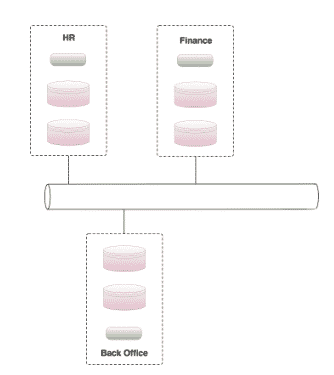

We’ll use Figure 2 to represent our “enterprise estate” in the following discussion, to allow us to treat the rest of our infrastructure as a black box. In the next section, we’ll examine four common patterns for connecting AI Services.

AI services can take over parts of existing business workflows. For example, if part of a company’s business workflow requires proof of identity through the provision of a utility bill or passport, the verification can be provided as an AI-enabled service, reducing the manual workload.

Another example is forecasting. Many organizations need to plan ahead to predict their required levels of inventory or staff over a given period of time. AI services could be integrated into this process to build more sophisticated and accurate models, saving the company money or opportunity costs.

Figure 2. Simplified Enterprise Representation

We’ll examine four approaches:

- Pattern 1: Synchronous API

- Pattern 2: Asynchronous API

- Pattern 3: VPN Stream In

- Pattern 4: VPN Fully Connected Streaming

Bear in mind that these approaches represent ways of getting the appropriate data into the required location to enable us to execute AI services to achieve a business goal.

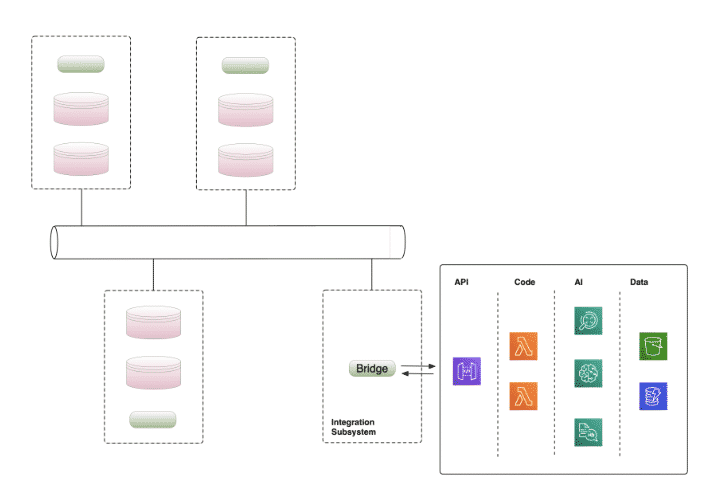

Pattern 1 Synchronous API

The first and simplest approach is to create a small system in isolation from the rest of the enterprise. Functionality is exposed through a secured API and accessed over the public internet. If a higher level of security is required then a VPN connection can be established through which the API can be called. This simple pattern is illustrated in Figure 3.

Figure 3. Integration Pattern 1 Synchronous API

In order to consume the service, a small piece of bridging code must be created in the estate to call the API and consume the results of the service. This pattern is appropriate when results can be obtained quickly and the API is called in a request/response manner.
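As a rough illustration of such bridging code, the sketch below posts a JSON payload to the service and blocks until the response arrives. The URL, the `x-api-key` header, and the payload shape are assumptions made for the example; substitute whatever your own API actually requires.

```python
import json
import urllib.request

# Hypothetical endpoint and key; replace with the values for your own service.
API_URL = "https://ai.example.com/v1/extract-text"
API_KEY = "replace-me"


def build_request(url, api_key, payload):
    """Build an authenticated JSON POST request for the AI service."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )


def call_service(payload, url=API_URL, api_key=API_KEY, timeout=10):
    """Call the API and block until the result comes back (request/response)."""
    request = build_request(url, api_key, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

The short timeout reflects the premise of this pattern: if the service cannot answer within a few seconds, the asynchronous pattern is probably a better fit.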

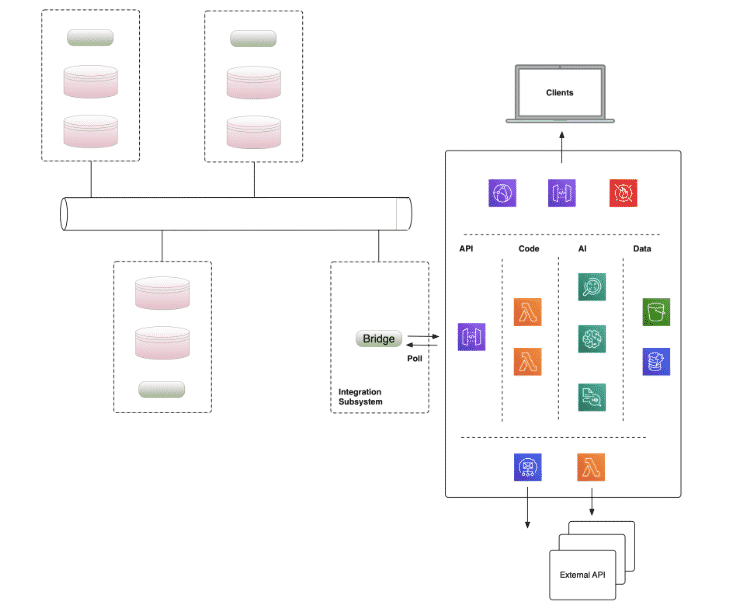

Pattern 2 Asynchronous API

Our second pattern is similar, in that we expose functionality through an API, but in this case, the API acts asynchronously. This pattern, which is appropriate for longer running AI services, is illustrated in Figure 4.

Figure 4. Integration Pattern 2 Asynchronous API

Under this “fire and forget” model, the bridge code calls the API but doesn’t receive results immediately, apart from status information. An example of this might be a document classification system that processes a large volume of text. The outputs of the system can potentially be consumed by the wider enterprise in a number of ways:

- Through a web application that users can interact with to see results.

- By the system messaging the results through email or other channels.

- By the system calling an external API to forward the details of any analysis.

- By bridge code polling the API for results.
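The polling option can be sketched as follows. The `submit` and `get_status` callables are hypothetical wrappers around the service's API, and the `PENDING`, `DONE`, and `FAILED` state names are assumptions rather than part of any particular service.

```python
import time


def submit_and_poll(submit, get_status, document, interval=5.0, timeout=300.0,
                    sleep=time.sleep, clock=time.monotonic):
    """Submit a job, then poll until it completes, fails, or times out.

    `submit(document)` returns a job id; `get_status(job_id)` returns a dict
    such as {"state": "PENDING"} or {"state": "DONE", "result": ...}.
    Both are hypothetical wrappers around the service's API calls.
    """
    job_id = submit(document)
    deadline = clock() + timeout
    while True:
        status = get_status(job_id)
        if status["state"] == "DONE":
            return status["result"]
        if status["state"] == "FAILED":
            raise RuntimeError(f"job {job_id} failed")
        if clock() >= deadline:
            raise TimeoutError(f"job {job_id} did not finish in {timeout}s")
        sleep(interval)  # wait before asking again
```

Passing `sleep` and `clock` as parameters is only a convenience for testing the loop without real delays.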

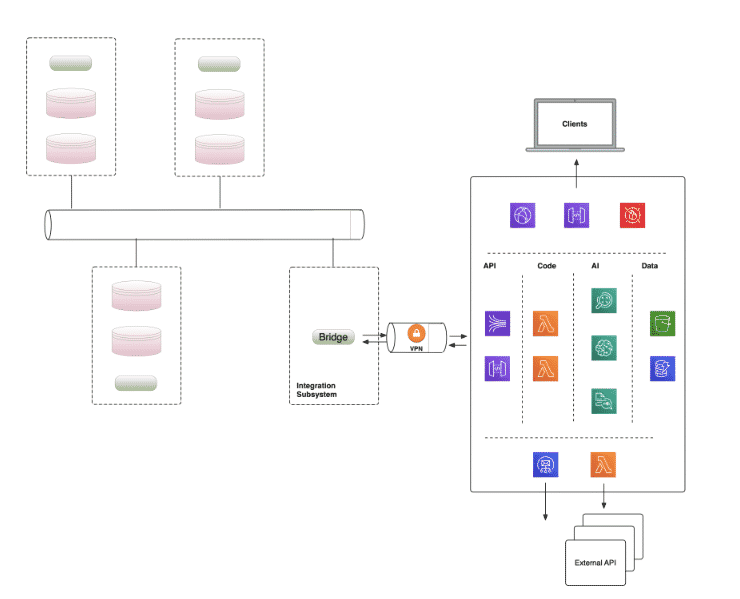

Pattern 3 VPN Stream In

A third approach is to connect the estate to cloud services through a VPN. Once a secure connection is established, the bridge code can interact more directly with cloud services. For example, rather than using an API gateway to access the system, the bridge code could stream data directly into a Kinesis pipeline.

Results can be accessed in a number of ways: through an API, via outbound messaging, through a web GUI, or via an output stream. This is illustrated in Figure 5.
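Streaming into Kinesis from bridge code might look like the sketch below. It assumes the client is a boto3 Kinesis client (created with `boto3.client("kinesis")`), whose `put_record` call takes `StreamName`, `Data`, and `PartitionKey`. The stream name, record layout, and `id` key field are made up for illustration.

```python
import json


def stream_records(kinesis_client, stream_name, records, key_field="id"):
    """Send each record to a Kinesis data stream and return how many were sent.

    `kinesis_client` is expected to be a boto3 Kinesis client; the stream
    name and the record layout are assumptions for this example.
    """
    sent = 0
    for record in records:
        kinesis_client.put_record(
            StreamName=stream_name,
            Data=json.dumps(record).encode("utf-8"),
            # Records with the same partition key land on the same shard.
            PartitionKey=str(record[key_field]),
        )
        sent += 1
    return sent
```

For higher volumes, batching records would likely be preferable to one call per record.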

Figure 5. Integration Pattern 3 Stream In

VPN

A Virtual Private Network (VPN) can be used to provide a secure network connection between devices or networks. VPNs typically use the IPsec protocol suite to provide authentication, authorization, and secure encrypted communications. By using IPsec, insecure protocols such as those used for file sharing can be used securely between remote nodes.

A VPN can be used to provide secure access to a corporate network for remote workers or to securely connect a corporate network into the cloud. Although there are a number of ways to set up and configure a VPN, we recommend a serverless approach using the AWS VPN service.

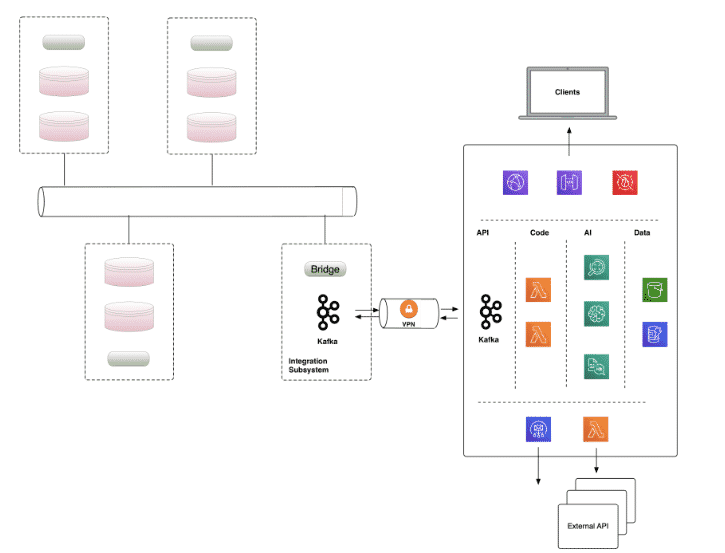

Pattern 4 VPN Fully Connected Streaming

Our final pattern involves a much deeper connection between the estate and cloud AI services. Under this model, we establish a VPN connection as before and use it to stream data in both directions. Although there are several streaming technologies available, we’ve had good results using Apache Kafka. This is illustrated in Figure 6.

Figure 6. Integration Pattern 4 Full Streaming

This approach involves operating a Kafka cluster on both ends of the VPN and replicating data between the clusters. Within the cloud environment, services consume data by pulling from the appropriate Kafka topics and place results back onto a different topic for consumption by the wider enterprise.
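The consume, process, and publish loop on the cloud side can be sketched without committing to a specific client library. The function below assumes a consumer that yields messages with a `.value` attribute and a producer with a `send(topic, value)` method, which is the shape the kafka-python `KafkaConsumer` and `KafkaProducer` classes provide. The topic name and the `analyze` step are hypothetical.

```python
def process_stream(consumer, producer, output_topic, analyze):
    """Consume messages, run an AI step on each, and publish the results.

    `consumer` is any iterable of messages with a `.value` attribute;
    `producer` is any object with a `send(topic, value)` method.
    `analyze` is a placeholder for the call into an AI service.
    """
    processed = 0
    for message in consumer:
        result = analyze(message.value)
        # Results go to a different topic from the one being consumed.
        producer.send(output_topic, result)
        processed += 1
    return processed
```

With a real Kafka consumer the loop normally runs indefinitely, so the return value is mainly useful in tests or when the consumer is configured with a timeout.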

Kafka

Apache Kafka is an open-source, distributed streaming platform. Kafka was originally developed at LinkedIn and later donated to the Apache Software Foundation. Although there are other streaming technologies available, Kafka’s design is distinctive in that it’s implemented as a distributed commit log.

Kafka is increasingly being adopted in high-throughput data streaming scenarios by companies such as Netflix and Uber. It’s possible to install, run, and manage your own Kafka cluster, but we recommend that you take the serverless approach and adopt a system such as Amazon Managed Streaming for Apache Kafka (MSK).

A full discussion of the merits of this approach and Kafka in general is outside the scope of this article. If you aren’t familiar with Kafka we recommend that you take a look at the Manning book Kafka in Action to get up to speed.

Which Pattern?

As with all architectural decisions, the approach to take depends on the use case. Our guiding principle is to keep things as simple as possible. If a simple API integration achieves the goal, then go with that. If, over time, the external API set begins to grow, then consider changing the integration model to a streaming solution to avoid the proliferation of APIs. The key point is to keep the integration to AI services under constant review and be prepared to refactor as needs dictate.

Table 1 summarizes the context in which each pattern should be applied.

| Pattern | Context | Example |
|---|---|---|
| 1 – Synchronous API | Single service, fast response | Text extraction from a document |
| 2 – Asynchronous API | Single service, longer running | Document transcription |
| 3 – VPN Stream In | Multiple services, results for human consumption | Sentiment analysis pipeline |
| 4 – VPN Fully Connected | Multiple services, results for machine consumption | Batch translation of documents |

Table 1. Applicability of AI as a Service legacy integration patterns
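As a rough aid, the table can be read as a small decision function. This is only one interpretation of Table 1, not a rule from the book: the parameter names are invented here, and real decisions will weigh more factors than these three.

```python
def choose_pattern(multiple_services, fast_response=True, machine_consumer=False):
    """Suggest an integration pattern from the criteria in Table 1."""
    if not multiple_services:
        # Single service: the choice depends on how long it takes to respond.
        if fast_response:
            return "Pattern 1: Synchronous API"
        return "Pattern 2: Asynchronous API"
    # Multiple services: the choice depends on who consumes the results.
    if machine_consumer:
        return "Pattern 4: VPN Fully Connected Streaming"
    return "Pattern 3: VPN Stream In"
```

For instance, a single long-running transcription service maps to Pattern 2, while a multi-service pipeline whose results feed other systems maps to Pattern 4.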
